I'll be the first to admit that I'm not an eager adopter of Agile Methods, but I believe that there are some good ideas in the agile methods arena (and good ideas elsewhere as well). Based on some recent experiences I believe that agile can produce good long-lived embedded software ... but that it requires some things that aren't standard agile practices to get there.

Some folks use the "agile" banner as an excuse to have an ad hoc process. That's not going to work out so well for most embedded software. So we'll just move past that and assume that we're talking about an agile methods approach that uses a well defined process. (I didn't say "tons of paper" -- I said "well defined." That definition could be a single process flow chart on a whiteboard, some PowerPoint slides, or the name of a book that is being followed.)

One of my major concerns with Agile as I have seen it practiced (and described by advocates) is that it is too light on Software Quality Assurance (SQA). This is by design -- as I understand it, a primary point of Agile is to trust developers to do the right things, and audits aren't part of a typical trust picture. I've seen Agile do well, and I've seen Agile do poorly. But most interestingly, from what I've seen it is very difficult to tell whether a gung-ho, competent Agile team that says they are doing all the right things is actually doing well in terms of resultant code quality. In other words, an agile team that says (and genuinely thinks) they are doing a good job might be producing great code or crummy code. And if nobody is there to find out which is happening, the team won't know the difference until it is much too late to fix things.

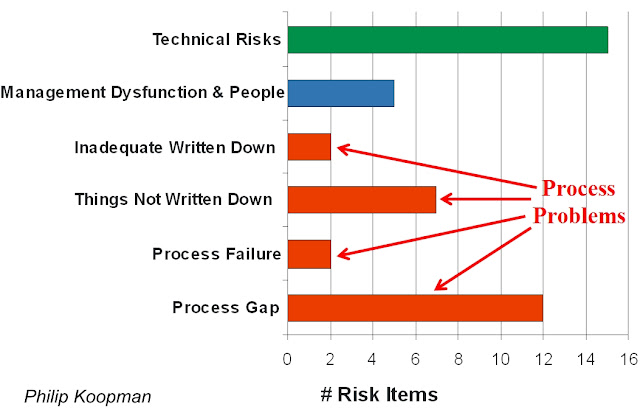

SQA is designed to make sure you're following the process you think you are following (whether agile or otherwise). Without an external check and balance it is all too easy to have a process failure. The failure could be skipped steps. It could be following the process too superficially, leading to ineffective efforts. It could be an honest misunderstanding of the intent of the process, as opposed to the letter of the process law. But in the end, I believe that you are taking a huge chance with any software process if you have no external check and balance.

This isn't a question of developers' integrity or capability, or of any character flaw. The simple fact is that developers and their management are paid to get the product done. While they can try their best to stay objective about the quality of their process, that isn't their primary job. The actual code is what they concentrate on, not the process. But everyone needs an external check and balance. And without one, upper management has no objective way to judge whether software development processes are working or broken (at least until some disaster happens, but then it's too late).

If I'm evaluating an Agile Methods group I ask the following questions: (1) Show me the written process you are following. (It can be an agile book; it can be a list of steps on a piece of paper; it can be just the Agile Manifesto. It can be anything.) (2) Explain to me how an external person can tell whether you are actually following that process. (3) Explain to me how an external person can tell that the process is producing the quality of software you want. I am flexible in terms of answers that are satisfactory, and none of these things preclude you from using an Agile Methods approach. But questions (2) and (3) typically aren't part of defined Agile Method approaches.
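As a rough illustration of one possible answer to question (2), here is a minimal Python sketch of a conformance check that someone outside the team could run. Everything in it is hypothetical and only for illustration: the step names in `REQUIRED_STEPS`, the `WorkItem` record, and the idea that each step leaves behind a pointer to some piece of evidence. A real team would substitute the steps from its own written process and whatever records it already keeps.

```python
from dataclasses import dataclass, field

# Hypothetical process steps (an assumption for this sketch); a real team
# would take these from its own written process.
REQUIRED_STEPS = ("peer_review", "unit_tests", "static_analysis")


@dataclass
class WorkItem:
    """One completed unit of work plus the evidence recorded for it."""
    name: str
    # Maps a step name to a pointer to its record (review ID, test log, ...).
    evidence: dict = field(default_factory=dict)


def missing_steps(item, required=REQUIRED_STEPS):
    """Return the required steps for which the item has no recorded evidence."""
    return [step for step in required if not item.evidence.get(step)]


def audit(items, required=REQUIRED_STEPS):
    """Map each non-conforming item name to the list of steps it is missing."""
    findings = {}
    for item in items:
        gaps = missing_steps(item, required)
        if gaps:
            findings[item.name] = gaps
    return findings


def conformance_rate(items, required=REQUIRED_STEPS):
    """Fraction of items with evidence for every required step (1.0 if empty)."""
    if not items:
        return 1.0
    return 1 - len(audit(items, required)) / len(items)
```

Calling `audit` on a list of `WorkItem` records returns only the items with gaps, and `conformance_rate` turns that into a single number that can be tracked over time. Note that a check like this only shows that evidence exists, which speaks to question (2). It says nothing about whether the reviews and tests were any good, so question (3) still needs a separate answer.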

You'd be surprised how hard it is to answer those questions for many agile projects with an answer that amounts to something other than "trust us, we're professionals." I'm a professional too, but I'm not perfect. Nobody is. And I have learned in many different scenarios that everyone needs an external mechanism to make sure they are on track. Sometimes the answer is more or less "Agile always works." It doesn't just automatically work. Nothing does.

Sometimes an agile team works really well because of a single individual leader who makes it work. That's great, and it can be very effective. But in a year, or five, or ten, when that leader is promoted, wins the lottery, retires, or otherwise leaves the scene, you are at very high risk of having the team slip into a situation that isn't so good. So while it is great to find a good leader who gets things rolling by whatever means works, a truly excellent leader will also plan for the day when (s)he isn't there. And that means establishing both a defined process and a quality check-and-balance on that process.

In my mind the above three questions capture the essence of SQA in a practical sense for most embedded system software projects. If you are happy with your answers for these questions, then Agile might work well for you. But if you simply blow off those topics and skimp on SQA, you are increasing your risk. And generally it's the kind of risk that eventually bites you.